Employees are putting sensitive data into public AI tools, and many organizations don’t have the controls to stop it. A new report from Kiteworks finds that most companies are missing basic safeguards to manage this data.

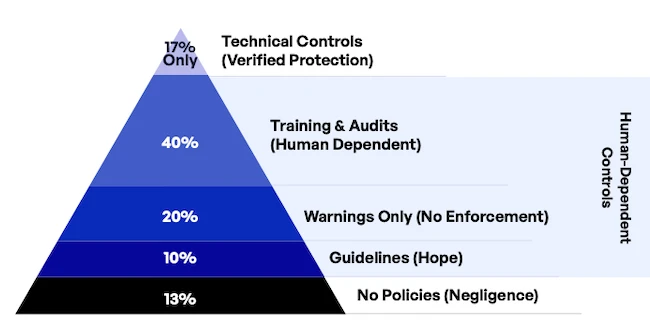

Security control maturity pyramid (Source: Kiteworks)

Organizations lack employee AI safeguards

Only 17% of companies have technology in place to block or scan uploads to public AI tools. The other 83% depend on training sessions, email warnings, or guidelines. Some have no policies at all.

Employees share customer records, financial results, and even credentials with chatbots or AI copilots, often from devices that security teams cannot monitor. Once this data enters an AI system, it cannot be pulled back. It may persist in trained models for years, accessible in ways the organization cannot predict.

The issue is compounded by overconfidence. One-third of executives believe their company tracks all AI usage, but only 9% actually have working governance systems. That gap between perception and reality leaves organizations blind to how much information employees expose.

The compliance problem

Regulators worldwide are moving fast on AI oversight. In 2024, U.S. agencies issued 59 new AI regulations, more than double the year before. Yet only 12% of companies list compliance violations as a top concern when it comes to AI.

The daily reality suggests far greater risk. GDPR requires records of all processing activities, but organizations cannot track what employees upload to chatbots. HIPAA demands audit trails for access to patient information, but shadow AI usage makes that impossible. Financial firms and public companies face the same issue with SOX and related controls.

In practice, most companies cannot answer basic questions such as which AI tools hold customer data or how to delete it if a regulator asks. Without visibility, every employee prompt to a chatbot could become a compliance failure.

Why it matters for CISOs

For CISOs, the findings point to two priorities. The first is technical control. Blocking uploads of sensitive data and scanning content before it reaches AI platforms should be treated as a baseline. Training employees helps, but the numbers show it cannot stand on its own.
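
As a rough illustration of what scanning content before it reaches an AI platform can look like, here is a minimal Python sketch. It is not taken from the Kiteworks report. The pattern set, the `scan_text` and `allow_upload` names, and the block-on-any-match policy are all assumptions made for the example, and a real data loss prevention control would cover far more data types and sit at the network or browser layer.

```python
import re

# Illustrative patterns only (assumed for this sketch); a production
# rule set would be much broader and tuned to reduce false positives.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def scan_text(text):
    """Return a sorted list of the pattern names that match the text."""
    return sorted(name for name, rx in PATTERNS.items() if rx.search(text))


def allow_upload(text):
    """Hypothetical policy: allow the upload only if nothing matched."""
    return not scan_text(text)


if __name__ == "__main__":
    prompt = "Draft a reply to jane@example.com about SSN 123-45-6789"
    print(scan_text(prompt))     # names of the patterns that matched
    print(allow_upload(prompt))  # False, so the upload would be blocked
```

A check like this would run before a prompt or file leaves the organization, with matches logged so that security teams gain the visibility the report says most companies lack.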

The second is compliance. Regulators already expect AI governance and are issuing penalties. CISOs need to show that their organizations can see and control how data flows into AI systems.

“Whether it’s Middle East organisations with zero 24-hour detection, European companies with as little as 12% EU Data Act readiness, or APAC’s 35% who can’t assess AI risks, the root cause is always the same: Organisations can’t protect what they can’t see,” said Patrick Spencer, VP of Corporate Marketing and Research at Kiteworks.